AI Misuse is a "Virus"

Plus, quitting teaching because of cheating. Plus, an old technology turns into a new "ultimate cheating device."

Issue 313


AI is a “Virus” on Campus

Business Insider has the story (paywall) of students’ ongoing misuse of AI in college classes, faking the work to score an increasingly devalued degree. To be fair, the last half of that sentence is mine; Business Insider only wrote about the ongoing misuse.

One of the bullet subheaders of the BI story is:

AI-generated plagiarism is still running rampant in colleges.

I’m not sure what exactly they expected would slow it down. But you can sense the article’s tone from that.

BI starts:

When Darren Hick, a philosophy professor, first came across an AI-generated essay in late 2022, he knew it was just the start of something bigger.

Almost two years later, Hick says the use of AI among students has become a "virus."

"All plagiarism has become AI plagiarism at this point," Hick, who teaches philosophy at Furman University, told Business Insider. "I look back at the sort of assignments that I give in my classes and realize just how ripe they are for AI plagiarism."

Students were some of the earliest adopters of AI-text generators when they realized their potential to produce essays from scratch and help with assignments.

We covered the Hick, Furman story in Issue 236.

BI continues that the ongoing AI use has created:

a new atmosphere of distrust between students and professors.

Ah, yeah. When people cheat, lie, and steal, it can create a bit of distrust. I think the author has missed the point. BI goes on:

Schools and universities initially tried to combat the flood of AI-generated plagiarism by banning the technology outright. Now, many are trying to incorporate technology into curriculums and encourage students to use it responsibly.

How’s that “encourage students to use it responsibly” going?

If you think about generative AI use in academic settings as being akin to using steroids in sports, a policy that “encourage[s] students to use it responsibly” is absurd. I cannot comprehend why anyone thinks such a policy would be successful.

The article quotes a professor who says, in the new era of AI abuse, he’s gone back to “book exams” and “in-class, handwritten assessments.”

Then, of course, BI does the obligatory nonsense:

The AI detectors on offer are also not perfect and run the risk of penalizing students who have done nothing wrong.

In its FAQs for educators, OpenAI acknowledges that there is no surefire way to distinguish between AI and human-made content. In answer to a question about whether AI detectors work, OpenAI wrote, "In short, no."

For the zillionth time — AI detectors do not punish students. They do not even accuse students any more than an airport metal detector accuses someone of trying to hijack a plane. It’s an alert that further — human — inquiry is needed. I mean, how silly is this sentence: airport scanners are also not perfect and run the risk of sending people to prison for terrorism when they have done nothing wrong.

This is not complicated.

Also, hi, Mr. Fox — quick question. Is there any way at all to keep you out of the henhouse? Mr. Fox: “In short, no.” In fact, Mr. Fox continues, “you should just leave the door open, since you can’t stop me anyway.” Us: that checks out.

I think we have all gone insane.

Anyway, Business Insider wrote about AI in college and, big shocker, it’s still happening. Because, my opinion again, no one cares enough to try to stop it.

Teachers Quitting the Classroom Over Academic Misconduct

When I spoke about academic misconduct in Oklahoma a few weeks ago (see Issue 310), I met a test center supervisor who had been a professor at one of the state’s bigger, best-known schools. She taught writing and told me she quit teaching because of the cheating. She said, in fact, that her last straw was when one of her students plagiarized her, submitting work she’d written under their own name, for credit.

Then, yesterday, I ran across this article in Time with the headline:

I Quit Teaching Because of ChatGPT

It starts:

This fall is the first in nearly 20 years that I am not returning to the classroom. For most of my career, I taught writing, literature, and language, primarily to university students. I quit, in large part, because of large language models (LLMs) like ChatGPT.

The author’s point is not entirely about cheating itself but rather about how writing is an essential part of thinking and that, if students aren’t actually writing, they’re not actually learning. If anyone cares, I agree. Though that’s not entirely in the scope of what I’m doing here.

Nonetheless, the piece has some portions worth sharing, such as:

In my most recent job, I taught academic writing to doctoral students at a technical college. My graduate students, many of whom were computer scientists, understood the mechanisms of generative AI better than I do. They recognized LLMs as unreliable research tools that hallucinate and invent citations. They acknowledged the environmental impact and ethical problems of the technology. They knew that models are trained on existing data and therefore cannot produce novel research. However, that knowledge did not stop my students from relying heavily on generative AI. Several students admitted to drafting their research in note form and asking ChatGPT to write their articles.

If you’re a teacher, especially a writing teacher, I highly recommend reading the piece. I think it connects quite well to something I tend to skirt by: the issue is not just the indefensible act of cheating, it’s that the cheating blows apart every effort of teaching. And, accordingly, learning. If cheating or shortcutting is going on, absolutely everyone in the process is wasting their time.

It’s also worth noting that the author calls out the plagiarism protector Quillbot, which is conveniently owned by cheating provider CourseHero, which itself now uses the Orwellian moniker Learneo:

My students also relied heavily on AI-powered paraphrasing tools such as Quillbot.

Quillbot, as the author points out, is not a learning tool. It is a “do it for me” shortcut and evidence eraser, kicking dirt over the lines of authenticity and effort. In academic settings, there is no reason whatsoever to use Quillbot.

But whatever, the piece is strong and I highly suggest checking it out.

Know Anyone Who’s Quit Teaching Because of Misconduct or Misuse of Technology?

My conversation in Oklahoma and the above Time Magazine piece have me wondering if there are others who’ve quit teaching because of misconduct or misuse of technology.

If you know someone who has left classroom instruction because of it — even in part — please let me know. I think this may be a larger story and, if it is, I’d like to write about it in a larger venue. A reply to The Cheat Sheet e-mail reaches me.

Thank you.

Old, Safe Technology Gets “Ethically Questionable” Cheating Upgrade

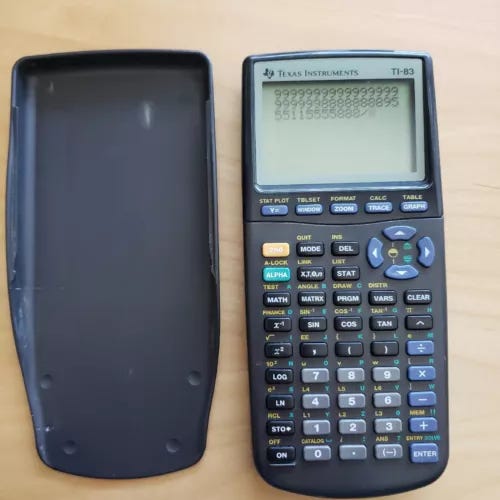

Sent in by a friend of The Cheat Sheet, this article from Hackaday spills how a company is selling modifications to the old graphing calculator, turning it into an “ethically questionable” cheating tool:

In an educational project with ethically questionable applications, [ChromaLock] has converted the ubiquitous TI-84 calculator into the ultimate cheating device.

According to the coverage, the modifications:

allow you to view badly pixelated images on the TI-84’s screen, text-chat with an accomplice, install more apps or notes, or hit up ChatGPT for some potentially hallucinated answers. Inputting long sections of text on the calculator’s keypad is a time-consuming process, so [ChromaLock] teased a camera integration, which will probably make use of newer LLMs’ image input capabilities.

And:

To prevent pre-installed programs from being used for cheating on TI-84s, examiners will often wipe the memory or put it into test mode. This mod can circumvent both.

Nice.

So, just another warning that cheaters are creative and resilient and that just about anything — even the old technology we used to trust — can be weaponized to serve people who don’t want to do the work of learning.

I only do in-class assignments now. Case studies are done in class, in teams of 2-4 people if they want, just as they might for a take-home assignment, and their answers are submitted at the end of the class. I tell them flat out this is because I don’t trust them not to use Course Hero or ChatGPT or any other cheating website. An assignment done outside of class is useless. I am not paid to train ChatGPT or whatever how to cheat better.
