390: Pangram on AI Detection Accuracy, Transparency

Plus, an upcoming webinar from Cursive Technology.

Issue 390

Subscribe below to join 4,846 (+47) other smart people who get “The Cheat Sheet.” New Issues every Tuesday and Thursday.

The Cheat Sheet is free, although patronage through paid subscriptions is what makes this newsletter possible. Individual subscriptions start at $8 a month ($80 annual), and institutional or corporate subscriptions are $250 a year. You can also support The Cheat Sheet by giving through Patreon.

As you likely know, the primary writer and publisher of The Cheat Sheet is on summer break until August 12 or 14. Until then, readers and others have been invited to submit articles, commentary, press information, product updates, or anything else related to academic integrity. Contributions can be submitted to Derek (at) NovemberGroup (dot) net.

This submission came in and is posted here, unedited.

Pangram Founder Writes about AI Transparency

When my co-founder, Bradley Emi, and I were grad students at Stanford studying machine learning and artificial intelligence, we quickly grasped that AI had the potential to do significant good, but also to do great harm at scale. It is one thing to have a small mill of people pumping out misinformation about election campaigns and social issues, or writing papers for students. It is quite another to have a tireless, limitless algorithm writing, adjusting, and inserting misinformation into every nook and cranny of the internet.

It was clear to us that, with ChatGPT available to everyone, there would be a growing need for transparency about where AI was the creator and where humans were. Knowing this would help us be more discerning when, for example, weighing product reviews or reading the news. Educators would also need visibility into students’ use of AI: not a binary verdict of right or wrong, but transparency into the human/AI makeup of an essay or other written assignment.

We have written and spoken extensively about Pangram’s commitment to transparency, both as a company and in our research methods (see Pangram’s technical report here). We also make our platform available to academics and researchers who need it, whether to analyze documents for their own research or to test our accuracy. Pangram is also free for anyone to use for a limited number of reviews.

Here are several academic studies that have tested Pangram’s accuracy, either solely to evaluate its capabilities or, as in one University of Maryland study, as part of assessing how accurately humans can detect AI writing.

A research paper from a team at the University of Maryland found Pangram to be nearly perfect in several tests and the best system tested:

“The majority vote of expert humans ties Pangram Humanizers for highest overall TPR (99.3) without any false positives, while substantially outperforming all other detectors”

“Pangram is near perfect on the first four experiments and falters just slightly on humanized o1-Pro articles, while GPTZero struggles significantly on o1-Pro with and without humanization. The open-source detectors degrade in the presence of paraphrasing and underperform both closed detectors by large margins on average”

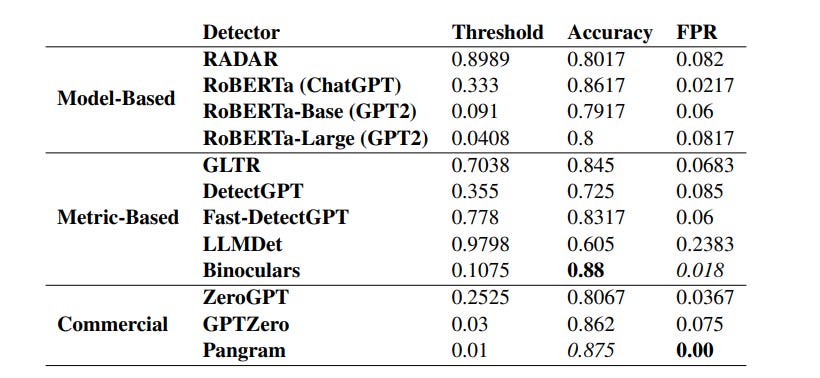

Another paper, from a different team of researchers at the University of Maryland, found that Pangram tied for the best accuracy in distinguishing AI from human writing, while also being the only system with zero false positives.

A third study, by a research team at the University of Pennsylvania and the Qatar Computing Research Institute, found that Pangram’s system was the best commercial service and the second-best overall.

The study describes Pangram’s success as “extremely strong” and “surprisingly high”:

“The two best performing teams (Pangram and Leidos) achieved extremely strong performance without adversarial attacks (99.3%) and with adversarial attacks (97.7%)”

“the two winning teams for subtask B (Leidos and Pangram) getting a surprisingly high 97.7% TPR on the dataset”

The fourth study, from a team at the University of Houston; the University of California, Berkeley; the University of California, Irvine; and Esperanto Technologies, reported similar results.

This study tested nine detectors and found Pangram the most accurate both before and after “back-translation” attacks (97% and 94%, respectively), while also maintaining a false positive rate at or below 1%.

Two other commercial detection systems in the test could not keep their false positive rates at or below 1%: one Pangram competitor showed a false positive rate as high as 8%, and another showed a false positive rate as high as 24%.
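For readers less familiar with the metrics quoted in these studies, the conventional definitions of true positive rate (TPR) and false positive rate (FPR) are sketched below. This is the standard textbook formulation, not a claim about any individual study’s exact methodology; here TP, FN, FP, and TN are counts of documents.

```latex
\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad
\mathrm{FPR} = \frac{FP}{FP + TN}
% TP: AI-written documents correctly flagged as AI
% FN: AI-written documents missed (labeled human)
% FP: human-written documents wrongly flagged as AI
% TN: human-written documents correctly labeled human
```

In classroom terms, a 1% FPR means roughly 1 of every 100 human-written essays would be wrongly flagged, while a 24% FPR means roughly 24 of every 100.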

Accuracy matters, and our model stays accurate as new generations of LLMs appear (read why here), but transparency into the document itself is equally important, especially to educators. Pangram gives full transparency into where AI writing exists in a document and where it does not. Furthermore, when the Chrome extension is used, the writing process is recorded and can be viewed as a timeline showing which segments were typed and which were pasted.

Dr. Susan Ray, a professor at Delaware Community College, recently wrote about what this transparency means for her teaching in this EdSurge article.

She explains why it is so important that students learn to write with AI:

This knowledge gap? It’s not just technological. It’s generational, socioeconomic and institutional. And it’s growing wider by the day. As first-year writing professors at community colleges, if we don’t meet this moment with intention, we will leave our most vulnerable students behind.

She adds:

Our students need guidance in navigating these new technologies, and if we fail to teach them how to engage with AI ethically and intelligently, we won’t just widen the skills gap, we’ll reinforce the equity gap, one many of us have spent our careers trying to dismantle.

Dr. Ray further explains that she secured a grant to give her students access to OpenAI, and that she added Pangram’s AI detector, noting:

Rather than leaving me to play Sherlock Holmes, scrutinizing student prose for malfeasance, Pangram’s findings offer transparency to both student and instructor. Unlike detectors I’ve used in the past, Pangram identifies subtly humanized AI-generated writing, removing the familiar crutch many students have reached for in the past to avoid the messier process of developing as writers.

Dr. Ray’s article is terrific because it offers a glimpse into how one educator sees both the urgency of teaching with AI and the simultaneous need for visibility into how students are using it, which in turn requires reliable, transparent AI detection technology.

We invite academics and researchers to contact us about using Pangram for their research, and we encourage you to share experiences like Dr. Ray’s. The more examples we see, and the more we analyze the process of teaching and learning, the better we all become at adjusting to a world with generative AI.

Author: Max Spero, CEO and co-founder of Pangram, worked at Google after earning a master’s degree in machine learning at Stanford. He and his friend, Bradley Emi, co-founded Pangram in 2023.

This notice is posted, as well, unedited:

Upcoming Webinar on Secure Assessments

Tuesday, August 26, 2025 8:00 AM - 9:00 AM EST

Academic Integrity for All: free plugins and tools to secure assessment and validate assignments in the LMS

Join us for a free webinar and panel featuring classroom practitioners, administrators, and technologists focused on assessment security and validity for Moodle LMS. This session brings together participants from North America and Asia to talk about their experiences in face-to-face and online classrooms, the challenges of creating secure and valid assessments, and the tools and tactics they have used firsthand to uphold academic integrity.

Topics will include the changing state of academic integrity in the AI era and navigating institutional policies and technology. The session will also leave time for audience questions.

Panelists:

Professor RJ Amador (Lead Instructor/Faculty at US Naval Academy, Howard Community College, Catholic International University)

Rollin Guyden (Director of Instructional Design & Technology at Columbia Theological Seminary)

Special focus will be on free tools for securing multiple-choice tests (using a webcam) and online writing, both of which can be deployed locally within a Moodle site.

Organized by Cursive Technology, Inc. and eLearning23.com.

https://cursivetechnologyinc-901.my.webex.com/weblink/register/r75d0f6c3dc076b3477e819a271b70a22