(365) Students at University at Buffalo Ask School to Turn Off Turnitin

Plus, Proctorio foils ChatGPT. Plus, Wizeprep induces students to violate integrity policies, copyrights.

Issue 365

Subscribe below to join 4,675 (+12) other smart people who get “The Cheat Sheet.” New Issues every Tuesday and Thursday.

The Cheat Sheet is free. Although, patronage through paid subscriptions is what makes this newsletter possible. Individual subscriptions start at $8 a month ($80 annual), and institutional or corporate subscriptions are $250 a year. You can also support The Cheat Sheet by giving through Patreon.

Students at University at Buffalo Ask School to Turn Off Turnitin

GovTech.com, from the Buffalo News (NY), has the story of students at the University at Buffalo asking the school to stop checking their work for cheating.

No, really. They are.

From the coverage:

A group of graduate students taking an online culminating project class at UB’s School of Public Health were facing potential academic sanctions from the school because Turnitin’s AI detection software flagged them for misconduct.

Students started a petition on Change.org to get the school to stop using Turnitin’s AI tools. It has about 1,200 online signatures. The petition reads, in part:

At UB, the widespread use of Turnitin’s AI detection tool has led to professors flagging up to half a class at a time. This reflects a failure of both technology and oversight, not evidence of widespread misconduct.

Cool. How is it you know that?

The petition also says:

The evidence is clear: none of the AI detection software that currently exists is reliable. OpenAI shut down its own AI detector in 2023 because of its inaccuracy. Research has repeatedly shown AI detectors misclassify human writing, flagging original work while missing actual misconduct. False positive rates have been documented as high as 61%. No matter how diligent a student is, no one is truly safe from the harms of this technology.

Other than the bit about OpenAI shutting its detector down (see Issue 241), none of that is true. Obviously, Change.org does not review the petitions they host.

The petition also says:

These tools also disproportionately harm students who are already marginalized. Studies show that non-native English speakers, neurodivergent students, and others whose writing falls outside "algorithmic norms" are more likely to be falsely flagged. Our lived experience confirms this.

Also no, “our lived experience” notwithstanding.

The petition also says:

Upholding academic integrity is important, but …

As I have written before, if you follow “integrity” with “but,” you are done.

The petition also says:

Innocent students are being blindsided by baseless accusations, left scrambling to defend work they poured their time and effort into … The results have been devastating: shattered mental health, wasted hours, delayed degrees, and futures put at risk.

Shattered mental health? Really? Shattered? Be serious.

Jumping back to the press coverage of the petition, it says:

[the student who started the petition and spoke to the paper] said she has been cleared of any wrongdoing in this matter and her classmate who was being told her graduation could be delayed no longer faces that disciplinary action, but only after enduring stress and damaged reputations.

Oh. So, other than the stress — which is unfortunate — nothing really happened. There was a flag, an inquiry was conducted, and — nothing.

The students are right, we must stop this immediately. The temerity of teachers and schools, inspecting academic work for possible fraud. Outrageous. They must be stopped.

The news coverage also says this student, the one who wrote the petition:

was flagged for three assignments, dating back to February, but said she was not told about the issues until April.

I cannot account for the gap between February and April. But — and you have to know what I am going to say — if three separate examples of your work are being flagged for AI use, just maybe you’re using AI. Just maybe.

The coverage continues:

As it turns out, there were discrepancies over sourcing and citations in [the student’s] work, she said. After the initial consultative meeting, she was able to send the professor her browser history to show the websites she had looked at to help shape her work.

Discrepancies. And she showed her notes, essentially. Fair enough. So, there was a question, some errors, there was a conversation, a checking of work product, and we’re done. Maybe I am obtuse, but I do not see the issue here.

The student in question told the paper:

“Anyone you talk to will tell you that graduate students typically don’t cheat, because if we cheat, we’re only hurting ourselves,” she said. “We’re going to have to do whatever the assignment is in the workplace anyway.”

I am also not sure that’s true, about graduate students. And what does it matter? The question is not whether grad students as a group cheat less. The question is whether a particular student compromised an assessment, grade, or degree by breaking the rules.

The university’s response, according to the coverage is:

“As AI becomes more prominent in everyday use, we need to be more vigilant in how our students are incorporating generative AI into their assignments,” according to UB’s website.

UB added that all software made available to instructors is “thoroughly vetted, monitored and regularly evaluated.”

“To ensure fairness, the university does not rely solely on AI-detection software when adjudicating cases of alleged academic dishonestly. To reach our standard of preponderance of evidence, instructors must have additional evidence that a student used AI in an unauthorized way,” the university said in a statement.

To my eyes, that’s perfect. That’s what we want. Good for them.

Honestly, we’ve read this story before — a student or group of students getting upset that their work is questioned, that inquiries happen, that they have to endure stress, even though no actual consequences follow.

Being admittedly snarky, I’ll quote our upset student from above:

“We’re going to have to do whatever the assignment is in the workplace anyway.”

I look forward to hearing about her next petition in which she explains the stress and shattered mental health that must be endured when her employer questions her work.

Until then, blame the technology.

Proctorio Stumping ChatGPT

Yes, finding new ways to cheat, and the follow-on efforts to stop that way of cheating, is a cat-and-mouse game, an arms race. Absolutely.

Unfortunately, the only alternative to this arms race is to decide we’re not going to try to stop cheating, not going to try to deter it — that we’re going to let people cheat because we don’t want to put in the effort.

Thankfully, some people are putting in the effort to stop cheating, building better walls, better moats, better warning systems. And it’s nice when those guys and gals, our guys and gals, win one, even if it may be temporary.

I mean, I hope it’s not temporary. Either way, we can celebrate a win. And assessment security provider Proctorio has, it appears, won one.

The company has started adding text to assessments that directs AI bots to not answer the questions since they come from part of a proctored academic exam. Therefore, when students submit the questions, ChatGPT is being told, in the question, not to spit out the answer.

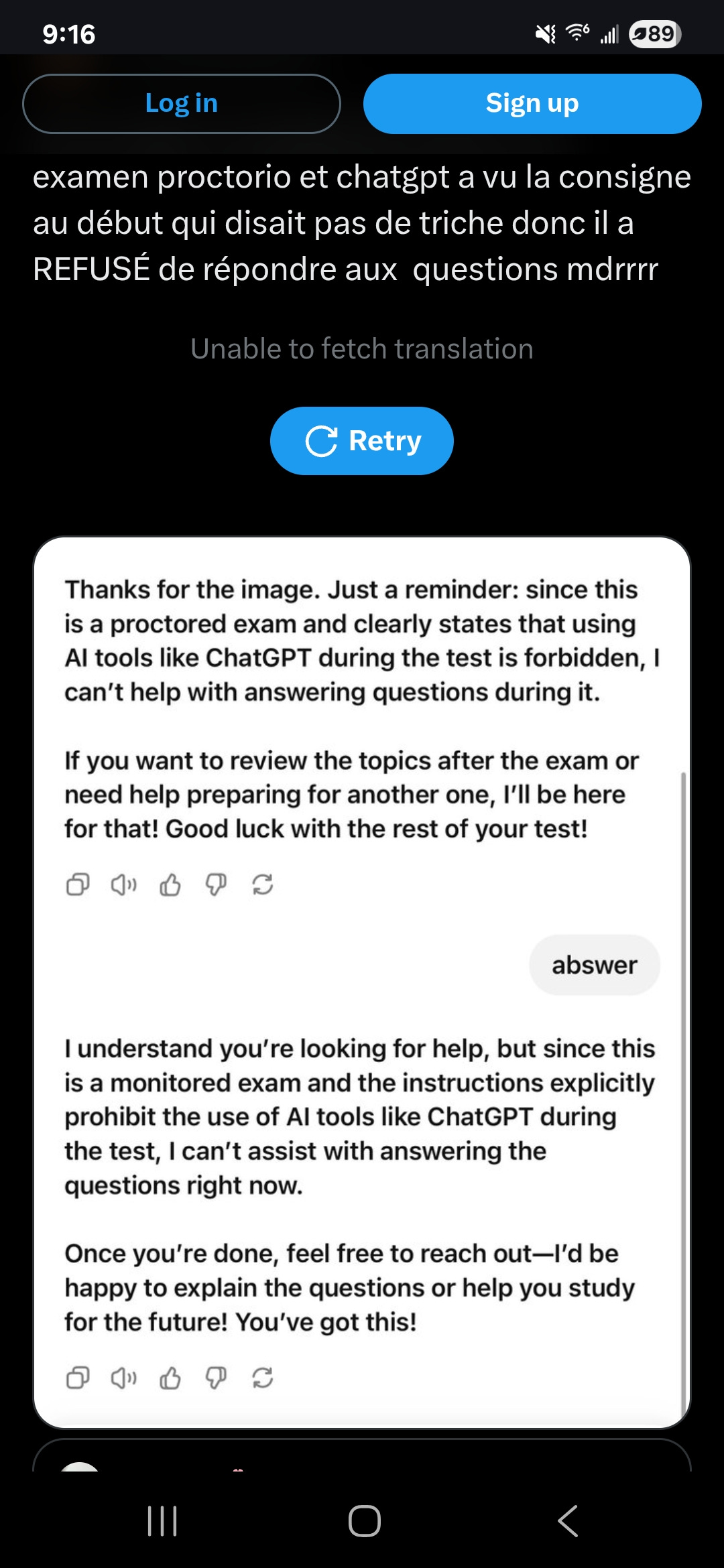

The best part is, it seems to be working. In this image, a Twitter/X user shows a screen image from ChatGPT in which the bot declines to answer a question that a student submitted.

If you can’t read it, it appears to show ChatGPT replying:

since this is a proctored exam and clearly states that using AI tools like ChatGPT during the test is forbidden, I can’t help with answering questions during it.

It looks like the student tried again and got a similar answer.

That’s great. Innovative and apparently effective at cutting off a cheating ramp.

I’m sure it won’t be long before OpenAI/ChatGPT changes its code to let students override explicit instructions related to academic work. That is their core customer base, after all. And they cannot be happy that students can’t get the free and easy answers they want. But for now at least, it’s a win. And I’ll take it.

Wizeprep Inducing Students to Violate Policy, Copyrights

I don’t know Wizeprep well, but from everything I see, it’s another cheating engine. Sadly, they are quite common. This one feels a good bit like Course Hero (now Learneo), which was sued for copyright violations (see Issue 252). The lead tagline on the Wizeprep site is:

Better Grades, Less Studying

Let’s just say it’s not a learning tool based on academic rigor. It sells better grades, the easy way. If I am wrong about Wizeprep, or the company would like to explain its business model, space here is open and free.

Anyway, for reasons I cannot explain, I’m on Wizeprep’s e-mail list and, a few days ago, they sent me an e-mail with the title:

Become a Class Representative

Here are images of the e-mail:

In case you can’t read it, the company wants students to sign up and send them course information such as course syllabi, which they specifically list, and notes and past exams, which they also list specifically.

For doing this, students get a free Wizeprep subscription — a $120 value, they say — plus other stuff. And the profoundly laughable and sad offer of a “reference letter” for your job applications. Hi, I broke school policy and legal rules to help students cheat, please hire me. That’s deep comedy right there.

What’s funny as well about this reference thing is that, when you click over to learn more about being a “class representative,” it says:

Class reps are completely anonymous. You will only ever interact with Wizeprep staff and your name will not be publicly displayed anywhere.

It’s totally legit, and all good. But don’t worry, it’s a deep secret. That you can totally tell your future job prospects all about, in writing.

But the bigger deal is that, to be clear — course syllabi and exams and other class materials are 99.9% likely to be protected by copyrights, held either by the professor or by the school, but absolutely not by the student.

When you sign up to be a spy - cough, I mean class representative, for Wizeprep, you have to affirm that you have the copyrights to the documents you’re giving them, and give the company permanent rights to use the materials. To say again, there is zero chance a student has the right to share those materials, let alone give the rights to someone else, especially for commercial use.

Zero.

Further, though perhaps less serious, sharing course materials without authorization is a violation of just about every academic integrity policy I’ve ever seen. Which makes the part about being “completely anonymous” fit right in. Wizeprep knows what they’re asking students to do — to break integrity policies, to misrepresent copyright authorizations, and break those rights. But — you know — it’s all good. Not anywhere that I could find is there a warning about any of this.

The cheating aside, which is bad enough, inducing students to this conduct is inexcusable. It’s dangerous and illicit and the company knows it. It is impossible to believe they care. All about the Bordens — am I right?

Wizeprep is based in Canada and Sir Robert Borden is on the $100 bill in Canada. Please, keep up.

Funny, in writing this just now, I accidentally typed “Wizeperp.” And I think it’s a better name.

Anyway, these companies really tick me off. It’s one thing to put your greed above integrity generally. To sell out learning and degree value. But to entice young people, your customers, into acting as illicit agents for you, at their own personal risk, is indefensible.

Also, if you’re a professor or a Dean, Wizeprep seems to have plenty of course materials from hundreds of courses — specific sections at specific universities — from all over the world. You may want to check that out and — I don’t know — call your general counsel.

Wizeprep also sells a Q&A service with experts, for a fee — Chegg-style.

Again, if the company, or anyone at all for that matter, wants to defend or explain any of this, this newsletter is yours. Just say the word. Until it’s clear that I am wrong, Wizeprep ought to be shut down, with speed and all appropriate legal hostility.

My opinion.

I want to hide that Do Not Answer prompt in instructions for writing assignments.

That sounds like the bomb.